Keyword (or keyterm) extraction is the task of automatically identifying the terms that best describe the contents of a document. It is the most important step in generating the Subject Indexes that you can find in the back of many of our publications.

Up until now we’ve been using Sketch Engine to accomplish this. Sketch Engine is proprietary software that offers many pre-compiled corpora to compare your own texts against. For example, we would upload the raw text of a new book and compare it against the British National Corpus, an extensive collection of English-language texts from the late 20th century. Since linguistic publications usually have a vocabulary that is quite different from everyday language, Sketch Engine did a good job of picking out the terms that were distinctive of our book, resulting in a list of keyterms such as language acquisition or vowel harmony.
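The general idea of comparing a text against a reference corpus can be sketched in a few lines of Python. This is an illustration only, not Sketch Engine's actual implementation: the function names, the smoothing constant and the toy sentences are assumptions made for this example, and it scores single words rather than multi-word terms.

```python
from collections import Counter
import re


def tokenize(text):
    """Lowercase the text and split it into runs of letters."""
    return re.findall(r"[^\W\d_]+", text.lower())


def keyness_scores(focus_text, reference_text, smoothing=1.0):
    """Score each word of the focus text by the ratio of its smoothed
    per-million frequency in the focus text to that in the reference text."""
    focus = Counter(tokenize(focus_text))
    reference = Counter(tokenize(reference_text))
    focus_total = sum(focus.values())
    reference_total = max(sum(reference.values()), 1)  # avoid division by zero

    scores = {}
    for word, count in focus.items():
        focus_fpm = count / focus_total * 1_000_000
        reference_fpm = reference[word] / reference_total * 1_000_000
        # Smoothing (an assumed value) keeps unseen reference words finite.
        scores[word] = (focus_fpm + smoothing) / (reference_fpm + smoothing)
    return scores


# Toy data standing in for a book and a reference corpus.
book = "vowel harmony is a kind of harmony in the vowel system of the language"
everyday = "the cat sat on the mat and the dog is in the house of a friend"

scores = keyness_scores(book, everyday)
ranked = sorted(scores, key=scores.get, reverse=True)
```

Words that are frequent in the book but rare or absent in the reference text (here *harmony* and *vowel*) end up at the top of `ranked`, while everyday words such as *the* score below 1.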

So why fix something that isn’t broken? Because we are committed to using free and open software wherever possible, and also to making things easier wherever possible. We wanted our own, local, open source keyword extraction software. As an intern with Language Science Press, I was tasked with developing that software. It should work just as well while being easier to use and possibly more tailored to the linguistic domain.

Keyword extraction models

The following paragraphs will demonstrate different approaches to keyword extraction. For illustration purposes we’re going to look at terms extracted from a forthcoming volume of papers from the 49th Annual Conference on African Linguistics. This book represents a wide variety of linguistic disciplines while adhering to the latest LangSci standards, and since I was involved in typesetting it, I’m somewhat familiar with its contents and have a sense of which key terms would be good ones.

Sketch Engine

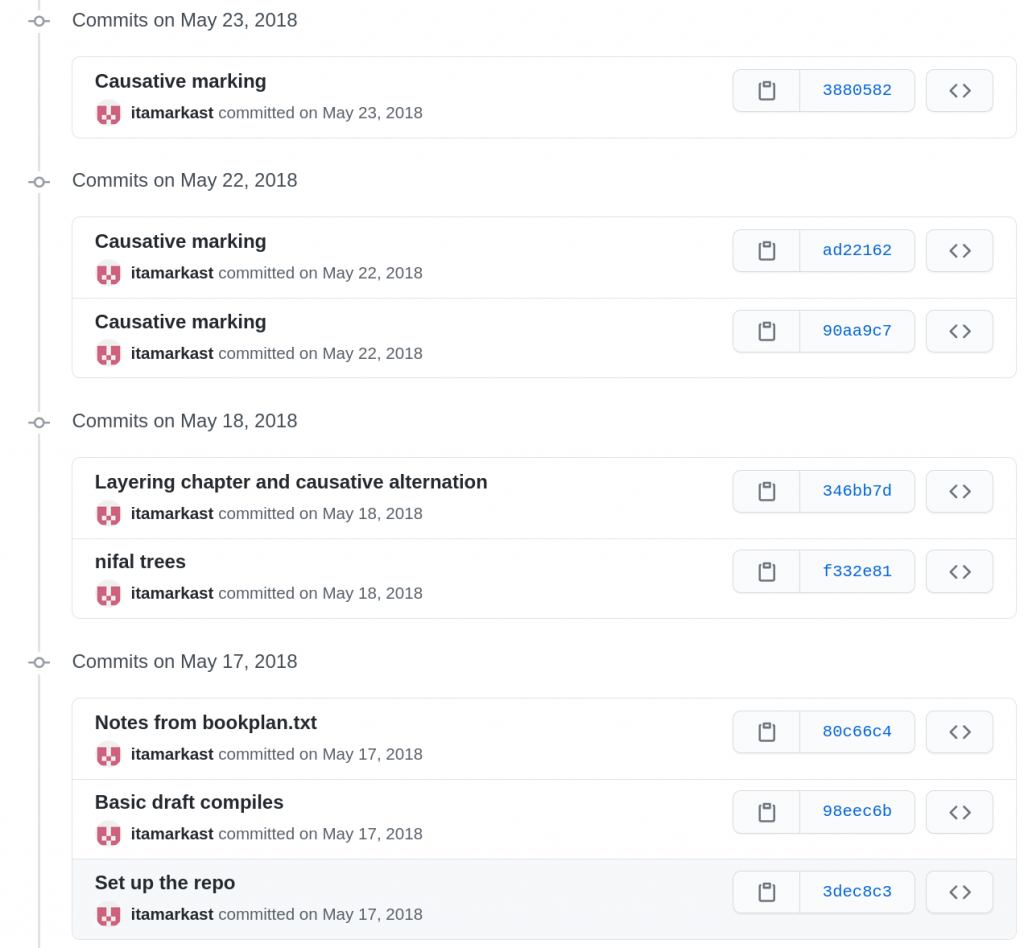

First, let’s take a look at our baseline, Sketch Engine.

Since our books are written using the typesetting system TeX, one important thing to consider is whether to feed the software the raw TeX code, including all the formatting commands and linguistic examples, or a so-called “detexed” version that ideally only contains the actual text.

The current workflow needs a detexed version, so we use the detex software that comes with TeX Live. We upload the resulting text file to the Sketch Engine web interface and compare it against a reference corpus with a few clicks. The resulting list can be exported to a spreadsheet format.

noun class

matrix clause

head noun

dā gbáŋ

amba rc

object agreement

vowel system

focus particle

focus marker

proleptic object

buy book

countable mass

subject position

direct object

atr harmony

external argument

tex localbibliography

sentence final focus

final focus

grammatical role

As the list above shows, the top 20 results are generally good. Some terms need manual editing (e.g. countable mass has to be expanded to countable mass noun, count noun and mass noun; see chapter 14), while others should be removed, namely fragments of linguistic examples (dā gbáŋ and buy book) and TeX commands (tex localbibliography), which appear even though we detexed the document!
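Part of this cleanup could in principle be automated. The following Python sketch drops candidate terms that contain a token from a hand-maintained blocklist. The blocklist and the function name are hypothetical, and fragments of linguistic examples such as buy book would still need manual review.

```python
# Hypothetical blocklist of tokens that indicate leftover TeX commands.
TEX_RESIDUE = {"tex", "localbibliography"}


def drop_tex_residue(terms, blocklist=TEX_RESIDUE):
    """Keep only terms in which no whitespace-separated token is blocklisted."""
    return [t for t in terms if not set(t.lower().split()) & set(blocklist)]


candidates = ["noun class", "tex localbibliography", "atr harmony", "buy book"]
cleaned = drop_tex_residue(candidates)
```

Matching whole tokens rather than substrings means that a term like context marker would not be dropped just because it contains the letters tex.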
